Maybe some of you already know this bug, but it did not occur very often, so I will start the story at the beginning. Some time after rolling out the fort battles globally, we got reports about hanging fort battles. The timer counts down to 0 seconds and then the game just hangs. The next round is never triggered, and because of this nobody could move anywhere, neither inside nor outside the battles. So this was of course a serious problem. We had already set up some Nagios monitoring scripts that told us right away when a battle began to hang, but all we could do was restart the Java server (that seemed to work, so the cause was something non-deterministic). And as we already blogged, there is currently no journaling implemented, so a restart of the server equals a complete battle reset. Don’t worry: I’m currently working on the journaling 😛


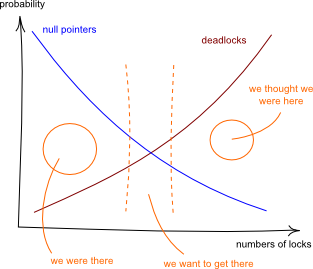

- A real-life (RL) deadlock

We can proudly announce that the bug with hanging fort battles should be fixed now. Because it was a non-deterministic problem, it was a real pain in the ass to find, but that was mostly because it was some kind of “nested problem”. First of all we went through the logs and searched for any kind of suspicious entries, but we didn’t find anything, and that surprised us a bit. Our first guess was a deadlock, so we watched out for blocked threads. For all those who don’t know what a “deadlock” is, just take a look at the picture on the right and it should be bloody clear 😀

We invested some time in trying to reproduce this bug, but without any success. So we had to wait until the symptoms reappeared on one of the running daemons to perform some checks and trace analysis on the running application. We thought that with our monitoring tools this should be no problem, but it was the opposite. Every time the monitoring reported an occurrence, a sysadmin was faster and restarted the server, so it was already running again when we logged in 😀 After we asked them not to do anything so that we could perform our analysis, the problem did not want to reappear for quite a while.

![]()

- The visual explanation of our problem.

During this time we implemented a watchdog that periodically checks the running application for deadlocks and monitor deadlocks. And then the long-awaited moment came: just after we restarted some of the Java servers for a quick-fix update, we got two crashes at the same time. The first thing we did was call the sysadmin staff and remind them not to do anything, which I guess is not too exhausting a wish 😀 We were pretty sure we would grep some messages about deadlocks out of the logs, but what did we see? Yes, nothing! 🙂

This was strange indeed, so I used jstack to get some information about the current threads, their states and their stack traces, but the output confirmed that no thread was deadlocked, so: WTF! By painfully going through the code manually and tracing what the issue could be, we got the right suspicion, which was then confirmed by staring at the server screen: the master thread was not there, not running, nothing. It had died.

But WTF: this should have been logged, so something was really messed up. Sometimes the simplest explanation is the right one: it was never written to the log file. Indeed, after checking I found out that the stderr pipe was not logged, so I fixed the init script (with some kind of sub-shell piping) and we ended up with a ConcurrentModificationException in the log. This was exactly the opposite of our first guess: we had no deadlocks, we just didn’t synchronize enough 😀
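For the curious, a deadlock watchdog like the one mentioned above can be sketched with the JVM's built-in `ThreadMXBean`. This is only a minimal sketch and not the watchdog from the game server: the class name `DeadlockWatchdog`, the methods `findDeadlocks` and `start`, the thread name and the reporting to stderr are all assumptions made for illustration.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical watchdog; all names here are illustrative.
final class DeadlockWatchdog {
    private static final ThreadMXBean THREADS = ManagementFactory.getThreadMXBean();

    /** Returns one line per deadlocked thread, or an empty list if there is none. */
    static List<String> findDeadlocks() {
        // findDeadlockedThreads() also covers java.util.concurrent locks,
        // but is only available if the JVM supports synchronizer monitoring.
        long[] ids = THREADS.isSynchronizerUsageSupported()
                ? THREADS.findDeadlockedThreads()
                : THREADS.findMonitorDeadlockedThreads();
        List<String> reports = new ArrayList<>();
        if (ids == null) {
            return reports; // null means: no deadlock found
        }
        for (ThreadInfo info : THREADS.getThreadInfo(ids, Integer.MAX_VALUE)) {
            if (info != null) { // a thread may have ended in the meantime
                reports.add(info.getThreadName() + " waits for " + info.getLockName()
                        + " held by " + info.getLockOwnerName());
            }
        }
        return reports;
    }

    /** Starts a daemon thread that runs the check every periodSeconds seconds. */
    static ScheduledExecutorService start(long periodSeconds) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "deadlock-watchdog");
            t.setDaemon(true);
            return t;
        });
        scheduler.scheduleAtFixedRate(() -> {
            try {
                for (String report : findDeadlocks()) {
                    System.err.println("DEADLOCK: " + report);
                }
            } catch (RuntimeException e) {
                // An escaping exception would silently cancel all further runs.
                System.err.println("watchdog check failed: " + e);
            }
        }, periodSeconds, periodSeconds, TimeUnit.SECONDS);
        return scheduler;
    }
}
```

Calling `DeadlockWatchdog.start(30)` once at startup would be enough to run the check every 30 seconds. Note that a check like this only reports threads that block each other. A thread that has died from an exception is simply gone, so it never shows up here; catching that would need a separate `Thread.isAlive()` check on the important threads.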

Thanks to the now existing log entries, we quickly tracked the problem down to just one small block of code that we now synchronize, and the queasy adventure ended abruptly. But hey, just look at the good part:

- our logging is now working just fine

- we are prepared for deadlocks
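To make the failure mode from this story a bit more concrete, here is a small sketch of a thread dying from a `ConcurrentModificationException`, of how to catch that death in code instead of relying on stderr, and of the synchronized fix. None of this is the actual game server code: `RoundDemo`, `join`, `countFighters`, `unsafeRound` and `runAndCapture` are hypothetical names, and the broken loop modifies the list from the same thread only to stand in, deterministically, for an unsynchronized writer on another thread.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical names throughout; a sketch, not the real server code.
final class RoundDemo {
    private final List<String> fighters = new ArrayList<>();

    // Writers take the same lock as the iterating reader below.
    void join(String name) {
        synchronized (fighters) {
            fighters.add(name);
        }
    }

    // Holding the lock for the whole loop keeps writers out while iterating.
    int countFighters() {
        synchronized (fighters) {
            int n = 0;
            for (String ignored : fighters) {
                n++;
            }
            return n;
        }
    }

    // Broken on purpose: the list changes while it is being iterated,
    // which is what an unsynchronized writer on another thread can cause.
    static void unsafeRound(List<String> fighters) {
        for (String f : fighters) {
            fighters.add(f + "-reinforcement");
        }
    }

    // Runs body on a thread named "master" and returns whatever killed it,
    // or null if the thread finished normally.
    static Throwable runAndCapture(Runnable body) {
        AtomicReference<Throwable> cause = new AtomicReference<>();
        Thread master = new Thread(body, "master");
        // Without a handler the stack trace is only printed to stderr.
        master.setUncaughtExceptionHandler((thread, error) -> cause.set(error));
        master.start();
        try {
            master.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return cause.get();
    }

    public static void main(String[] args) {
        Throwable t = runAndCapture(
                () -> unsafeRound(new ArrayList<>(Arrays.asList("a", "b"))));
        System.out.println("master died from: " + t);
    }
}
```

In a real server the handler would write to the normal log file rather than store the exception, so a dying thread leaves a trace even if nobody captures stderr.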